A/B testing for direct mail campaigns helps you make better decisions by comparing two mailer versions to see which performs better. It replaces guesswork with data, showing what drives results. Start with a control version, change one element, and mail each version to a randomly assigned audience segment. Track metrics like response rate, conversions, and ROI using tools like QR codes, promo codes, and personalized URLs.

Key takeaways:

- Test one variable at a time (e.g., headline, offer, design).

- Define clear goals (e.g., reach a 3.5% response rate).

- Use reliable tracking methods (e.g., match-back analysis or unique phone numbers).

- Analyze results carefully to identify what works.

- Iterate continuously to refine and improve over time.


Setting Goals and Choosing Metrics for Success

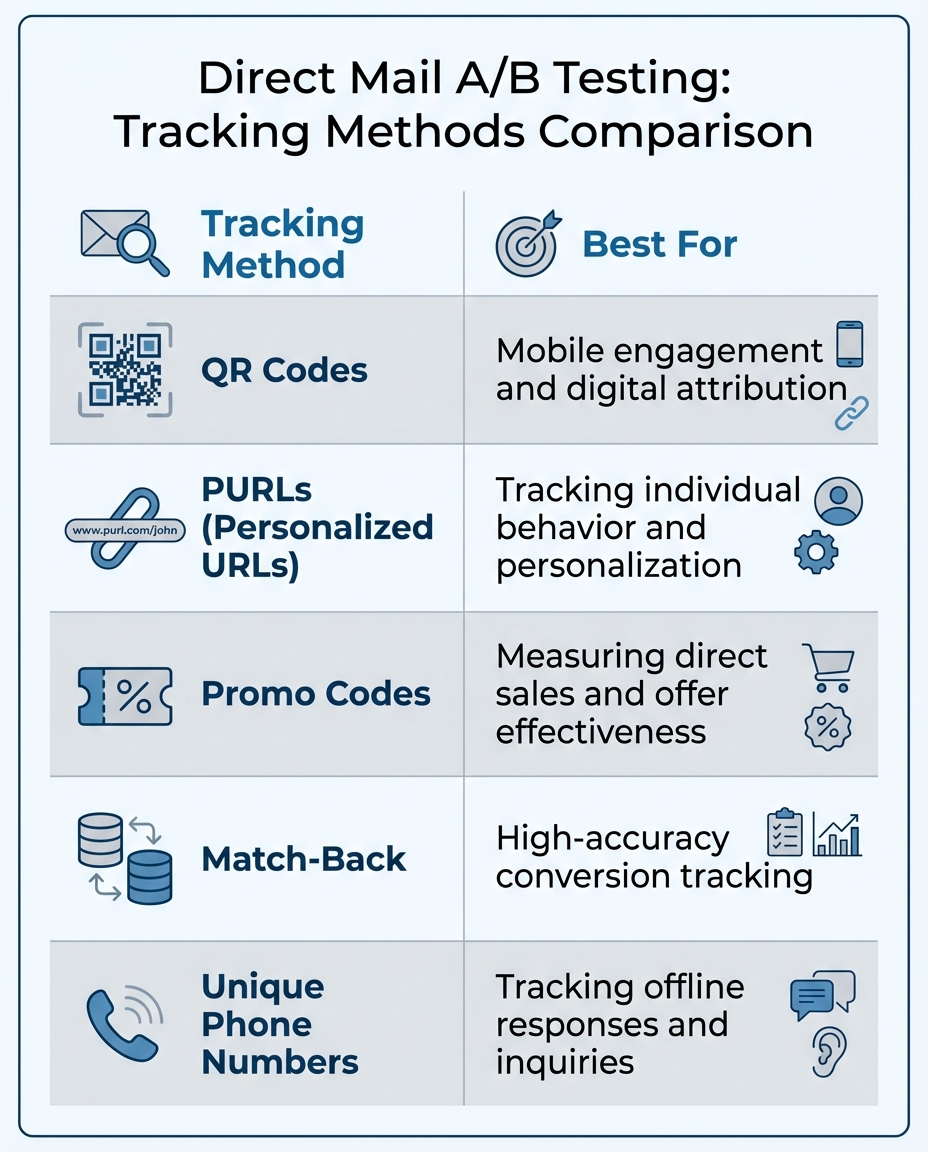

Direct Mail A/B Testing Tracking Methods Comparison

Before sending out your campaign, it’s crucial to define what success looks like. Start by reviewing data from previous campaigns to pinpoint areas needing improvement. For instance, maybe your last mailer had a strong response rate but fell short on conversions, or perhaps the overall ROI didn’t meet expectations. This kind of analysis provides a baseline to help you focus on where changes are necessary.

With this baseline in mind, you can set clear, focused goals for your campaign.

Defining Your Campaign Goals

Establish specific, measurable objectives before launching your campaign. Instead of vague targets like "boost sales", aim for numbers you can track – such as 500 website visits, $25,000 in sales, or a 3.5% response rate. As Dawn Burke, Marketing Manager at Suttle-Straus, explains:

"Without having a goal in mind, it will be difficult to know which variables to test."

Your goals should guide your testing strategy. Use your CRM system – whether it’s Salesforce, Marketo, or another tool – to analyze customer data and make informed guesses about which segments will respond best. For example, if your data shows that a specific group reacts positively to certain types of offers, use that insight to create test variations tailored to them.

Key Metrics to Track for A/B Testing

The metrics you track should tie directly to your campaign goals. For starters, the response rate measures how many recipients took any action, giving you a sense of initial engagement. Conversion rate goes a step further, showing the percentage of recipients who completed your desired action, like making a purchase or signing up. To evaluate the campaign’s financial success, track revenue generated and ROI.
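As a rough illustration of how these metrics relate, the sketch below computes them from raw counts for one mailer version. The class name, field names, and all figures are hypothetical, and conversion rate is taken as a share of all recipients, following the definition above.

```python
from dataclasses import dataclass


@dataclass
class VariantResults:
    """Raw counts for one mailer version (all figures are made up)."""
    pieces_mailed: int
    responses: int      # any tracked action: scan, visit, call
    conversions: int    # completed the desired action, e.g. a purchase
    revenue: float      # revenue attributed to this version, in dollars
    cost: float         # total campaign cost for this version, in dollars

    @property
    def response_rate(self) -> float:
        return self.responses / self.pieces_mailed

    @property
    def conversion_rate(self) -> float:
        # Share of all recipients who converted, not share of responders.
        return self.conversions / self.pieces_mailed

    @property
    def roi(self) -> float:
        return (self.revenue - self.cost) / self.cost


control = VariantResults(
    pieces_mailed=5000, responses=150, conversions=40,
    revenue=6000.0, cost=3000.0,
)
print(f"Response rate:   {control.response_rate:.2%}")
print(f"Conversion rate: {control.conversion_rate:.2%}")
print(f"ROI:             {control.roi:.0%}")
```

Keeping the counts per version in one structure makes it easy to compare Version A and Version B on the same definitions.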

Accurate data collection is key, so set up tracking mechanisms from the beginning. Use tools like unique QR codes, personalized URLs (PURLs), promo codes, or dedicated phone numbers for each test variation. These tools help you identify which version prompted specific responses. Additionally, match-back processing can compare your mailing list to your customer database, confirming which recipients converted during the campaign.

| Tracking Method | Best For |
|---|---|
| QR Codes | Mobile engagement and digital attribution |
| PURLs | Tracking individual behavior and personalization |
| Promo Codes | Measuring direct sales and offer effectiveness |
| Match-Back | High-accuracy conversion tracking |
| Unique Phone Numbers | Tracking offline responses and inquiries |
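The match-back step described above can be sketched as a simple lookup. This is a minimal illustration, not a production matching routine: the record layout, the name-plus-ZIP match key, and the sample data are all assumptions, and real match-back services typically use more robust address matching.

```python
import re
from collections import Counter
from datetime import date


def match_key(name, zip_code):
    """Normalize name + ZIP so small formatting differences still match."""
    clean = re.sub(r"[^a-z ]", "", name.lower())
    return " ".join(clean.split()), zip_code.strip()[:5]


def match_back(mailed, orders, start, end):
    """Count orders per variant placed by mailed recipients inside the window."""
    variant_by_key = {match_key(r["name"], r["zip"]): r["variant"] for r in mailed}
    matched = Counter()
    for order in orders:
        if start <= order["date"] <= end:
            variant = variant_by_key.get(match_key(order["name"], order["zip"]))
            if variant is not None:
                matched[variant] += 1
    return matched


# Hypothetical mailing list and order export.
mailed = [
    {"name": "Ana Diaz", "zip": "07601", "variant": "A"},
    {"name": "Bo Chen", "zip": "07601", "variant": "B"},
    {"name": "Cy Ford", "zip": "07030", "variant": "B"},
]
orders = [
    {"name": "ANA DIAZ", "zip": "07601-1234", "date": date(2025, 3, 10)},
    {"name": "Bo  Chen.", "zip": "07601", "date": date(2025, 3, 20)},
    {"name": "Cy Ford", "zip": "07030", "date": date(2025, 8, 1)},    # outside window
    {"name": "Dee Cole", "zip": "10001", "date": date(2025, 3, 15)},  # never mailed
]

result = match_back(mailed, orders, start=date(2025, 3, 1), end=date(2025, 5, 30))
print(dict(result))
```

Orders from people who were never mailed, or that arrive outside the campaign window, are left out of the per-version counts.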

Designing and Implementing Test Variations

Once you’ve set clear campaign goals, the next step is crafting test variations that provide meaningful insights. The trick lies in deciding which elements to test and organizing your test groups in a way that delivers reliable results.

What to Test in Direct Mail Campaigns

Nearly every aspect of your direct mail piece can be tested, but some components have a bigger influence than others. For example, testing copy and messaging can be as simple as tweaking headlines or adding personalization, such as the recipient’s name, location, or purchase history. You can also experiment with call-to-action (CTA) wording, length, and frequency – like comparing a single "Buy Now" prompt to several response options.

Design elements often play a huge role in response rates. Try switching between custom images and stock photos, illustrations and photographs, or bold and muted color schemes. Similarly, format and packaging can make a difference. Oversized envelopes, for instance, often outperform standard letter-sized ones. You can also test different dimensions, such as 4" x 6" versus 6" x 9" postcards or #10 envelopes versus self-mailers.

Your offer structure is another area worth exploring. The way you present value matters – a 20% discount might resonate differently than a $5-off deal, even when the savings are identical (as they are on a $25 order). Additionally, testing response channels can be revealing. For instance, compare QR codes with promo codes or test Personalized URLs (PURLs) against a generic landing page.

Once you’ve decided what to test, the next step is structuring your test groups properly.

Creating Effective Test Groups

The cornerstone of A/B testing is straightforward: test only one variable at a time. As Mike Gunderson, Founder and President of Gundir, explains:

"It’s only effective when isolating a single variable to test in the creative."

If you change multiple elements at once, it becomes impossible to pinpoint which adjustment caused the difference in response.

Start with a control (Version A), then create Version B by changing just one element while keeping everything else constant. Divide your audience into evenly sized, randomly assigned segments so that any difference in response reflects the change you made rather than who received each version. Keep in mind that larger test groups yield more reliable results – a group of 100,000 will produce more precise estimates than one with just 1,000 recipients.
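One simple way to build random, evenly sized groups is to shuffle the list and cut it in half. The sketch below assumes the audience is a plain list of recipient IDs. The function name and ID format are illustrative, and the fixed seed is there only so the split can be reproduced later.

```python
import random


def split_audience(recipients, seed=None):
    """Shuffle a copy of the list and cut it into two evenly sized halves."""
    shuffled = list(recipients)
    random.Random(seed).shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


# Hypothetical audience of 10,000 recipient IDs.
recipient_ids = [f"R{i:05d}" for i in range(10_000)]
group_a, group_b = split_audience(recipient_ids, seed=42)
print(len(group_a), len(group_b))  # 5000 5000
```

Shuffling before splitting avoids accidental patterns, such as a list sorted by ZIP code or signup date sending one region or cohort entirely to one version.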

Finally, ensure your test variations are printed with precision. For high-quality digital and offset printing, along with in-house design, bindery, and mailing services, you might want to explore working with Miro Printing & Graphics Inc., a full-service print shop based in Hackensack, NJ.


Collecting and Analyzing Data

Once your goals and test designs are in place, the next step is diving into data analysis. This is where you fine-tune your direct mail strategy by turning raw data into actionable insights. After your test variations are delivered, it’s all about gathering accurate information to see what works – and what doesn’t.

Tracking Responses and Engagement

Start by assigning unique identifiers to each test variation. This allows you to trace exactly which version led to each response. For example, Personalized URLs (PURLs) can include the recipient’s name or reference a specific product, making it easy to track individual behavior and guide users to tailored landing pages. Similarly, QR codes can track website visits, scan times, and even location data – offering a detailed view of engagement.

If conversions are your primary focus, unique promo codes are a great way to measure redemption rates and figure out which offers struck a chord with your audience. For campaigns targeting audiences less inclined toward online interactions, consider using dedicated phone numbers for each variation. This lets you track call volume and duration, providing another layer of insight. Ultimately, your tracking methods should align with your campaign goals, whether that’s driving website visits, boosting sales, or increasing overall conversions.
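To illustrate the idea of one identifier per variation, the sketch below builds a promo code and a tagged landing-page URL for each version. The domain, campaign name, and code format are placeholders, and the UTM-style query parameters are a common web-analytics convention rather than something this article prescribes. The resulting URL could be printed directly or encoded into a QR code.

```python
from urllib.parse import urlencode

BASE_URL = "https://example.com/offer"  # placeholder landing page


def tracking_assets(campaign, variant):
    """Build a promo code and a tagged URL that identify one test variation."""
    promo_code = f"{campaign}-{variant}".upper()
    query = urlencode({
        "utm_source": "direct_mail",
        "utm_campaign": campaign,
        "utm_content": variant,  # distinguishes Version A from Version B
    })
    return {"variant": variant, "promo_code": promo_code, "url": f"{BASE_URL}?{query}"}


for variant in ("a", "b"):
    print(tracking_assets("spring25", variant))
```

Because each version carries its own code and URL, every redemption or visit can be traced back to the mailer that prompted it.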

To get the full picture, integrate your tracking data with platforms like Salesforce or Marketo. This integration connects offline direct mail efforts with digital engagement, giving you a comprehensive understanding of customer behavior and how they interact post-mail.

Once your tracking system is in place, you can move on to evaluating the results and identifying performance trends.

Analyzing Test Results

After collecting your data, focus on the quality of conversions rather than just the sheer volume of responses. A high response rate might look impressive, but it doesn’t mean much if it doesn’t lead to actual sales. For instance, offering a gift card might generate more responses initially, but a white paper offer could lead to better conversion-to-sale rates by attracting more qualified leads.

To get meaningful insights, compare your test variations against a proven control to establish a baseline for performance. Make sure your sample size is large enough to minimize variability. For example, in a campaign with a 2% response rate, mailing 10,000 pieces gives you a 95% confidence range of roughly 1.73% to 2.27%. Mailing only 2,000 pieces widens that range to roughly 1.39% to 2.61%. To ensure your results are statistically sound, use an online significance calculator to check whether differences in performance are due to your test variable or just random chance. If the differences are too small to draw conclusions, it’s worth retesting the same element before making any final decisions.
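For readers who prefer to check the numbers themselves, here is a minimal sketch of both calculations using only Python's standard library. It assumes the usual normal approximation at a 95% confidence level, which reproduces the quoted ranges to within rounding. The second function is a standard two-proportion z-test, and the response counts fed to it are hypothetical.

```python
import math
from statistics import NormalDist


def response_rate_interval(rate, pieces, confidence=0.95):
    """Normal-approximation confidence interval for a response rate."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * math.sqrt(rate * (1 - rate) / pieces)
    return rate - margin, rate + margin


def two_proportion_p_value(resp_a, n_a, resp_b, n_b):
    """Two-sided p-value for the difference between two response rates."""
    pooled = (resp_a + resp_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (resp_b / n_b - resp_a / n_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


for pieces in (10_000, 2_000):
    low, high = response_rate_interval(0.02, pieces)
    print(f"{pieces} pieces: {low:.2%} to {high:.2%}")

# Hypothetical test: control draws 200 responses from 10,000 pieces,
# the variant draws 250 responses from 10,000 pieces.
p = two_proportion_p_value(200, 10_000, 250, 10_000)
print(f"p-value: {p:.3f}")
```

A p-value below 0.05 corresponds to the 95% confidence threshold: a difference that large would rarely appear by chance alone if the two versions truly performed the same.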

Mike Gunderson, Founder and President of Gundir, puts it best:

"Testing is an ongoing process that continuously refines marketing initiatives."

When you identify a winning variation, it becomes your new control. This sets the foundation for future tests and keeps your strategy evolving through small, steady improvements.

Scaling and Optimizing Your Campaigns

Scaling Successful Variations

When you’ve pinpointed a winning variation, the next step is to scale it carefully to a larger audience. Start by designating this winning piece as your new control, which will serve as the benchmark for all future tests. This ensures you have a reliable foundation for measuring performance as you continue to refine your campaigns.

Before you roll it out on a broader scale, take the time to backtest your winning variation and conduct a match-back analysis. This step will help confirm that your results are statistically sound and not just a fluke.

As you scale, focus on growing your mailing list and reaching new audience segments, but make sure to retain the elements that made the original variation successful. Keep in mind that direct mail has a typical read period of 60 to 90 days, so allow enough time to collect meaningful data before making further adjustments.

This process of scaling and validating your success sets the stage for continuous improvement, creating a feedback loop that drives better results over time.

Iterative Testing for Long-Term Success

Testing isn’t a one-and-done task – it’s an ongoing process. As David Ogilvy wisely said:

"Never stop testing, and your advertising will never stop improving."

Consumer behavior is always evolving, which means your approach needs to evolve too. That’s why systematic, iterative testing is key to staying ahead.

Develop a testing roadmap to guide your efforts. This roadmap should outline the specific elements you plan to test, the order in which you’ll test them, and the scale of each test. Generally, it’s best to prioritize testing in this sequence: mailing list, offer, and then creative elements. For example, once you’ve nailed down a winning headline, shift your focus to testing other variables like imagery or the call-to-action. This step-by-step approach will help you refine your mailers to near perfection.

Erik Koenig, President & Chief Strategist at SeQuel Response, puts it perfectly:

"The end goal of a direct mail test is knowledge, not profit. Regardless of the outcome, there are no wasted results."

To strike the right balance, mix incremental A/B tests for gradual improvements with occasional “beat the control” tests to uncover bold new ideas. Additionally, amplify your successful mail variations by integrating them with digital channels like retargeting ads or Connected TV. This multi-channel approach can increase both frequency and reach.

By treating testing as a continuous cycle, you’ll ensure your campaigns remain relevant, engaging, and effective over time.

For expert execution of your scaled direct mail campaigns, check out the comprehensive printing and mailing services offered by Miro Printing & Graphics Inc.

Conclusion

A/B testing takes the guesswork out of direct mail campaigns, replacing it with clear, data-backed insights. Instead of relying on intuition, you gain solid evidence about what truly connects with your audience.

However, it’s crucial to approach testing with discipline and focus. Karen Loggia emphasizes this by warning that skipping proper testing can lead to repeated mistakes and limit your return on investment (ROI). To avoid this, keep your testing process simple and targeted. Focus on one variable at a time – whether it’s the offer, headline, or format – so you can clearly identify what’s driving the results. Reliable tracking methods are essential to gather precise data and make informed decisions.

Also, testing isn’t something you do once and forget. It’s a continuous process of refining and improving. Regular testing helps combat audience fatigue and ensures your campaigns stay effective over time.

FAQs

What should I test first in my direct mail campaign?

To pick the right variable to test first in your direct mail campaign, start by setting a clear, measurable goal. This could be anything from boosting website traffic to increasing phone inquiries or driving sales. A well-defined objective helps you gauge the success of your efforts. Then, take a close look at past campaign data to pinpoint elements that had the most noticeable impact – things like specific offers or audience segments. From there, zero in on one high-impact variable that’s easy to tweak and likely to influence results, such as the offer itself or the mailing list segment.

Partner with a reliable printer, like Miro Printing & Graphics Inc., to ensure your test materials are produced accurately and on time. Keep all other parts of the mailer consistent so you can clearly see how the tested variable affects outcomes. When your materials are ready, use tools like unique URLs, QR codes, or coupon codes to track responses. Make sure to send your test mailers to a randomly selected, evenly split sample of your audience for accurate and reliable data. Following these steps will help you run a focused and cost-efficient test, providing actionable insights for your campaign.

What are the best ways to track responses in A/B testing for direct mail campaigns?

Tracking responses in A/B testing for direct mail campaigns is crucial to understanding what works and what doesn’t. Here are some practical ways to monitor engagement effectively:

- Personalized URLs (PURLs): Assign a unique web address to each recipient. This allows you to see exactly who visited and interacted with your campaign online.

- QR Codes: Include scannable codes that direct recipients to specific landing pages. They’re quick, user-friendly, and great for tracking responses.

- Unique Discount Codes: Use custom promo codes for each campaign version. Tracking redemption rates will give you valuable insights into which version performed better.

- Dedicated Phone Numbers: Set up separate phone numbers for different test versions. This makes it easy to measure call responses tied to each variation.

These methods provide clear data, helping you analyze campaign results and refine your strategy for future mailings.

How can I make sure my A/B test results are reliable and meaningful?

To make sure your A/B test delivers trustworthy results, begin with a randomly divided sample group that’s big enough to yield meaningful data. Set a clear, measurable goal upfront – whether that’s tracking the response rate, conversion rate, or ROI – so you know exactly what you’re evaluating. Let the test run long enough to gather enough data, avoiding the temptation to jump to conclusions too early. Lastly, aim for a confidence level of 95% or higher to ensure that any differences you see are statistically valid and not just random noise.
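To get a feel for what "big enough" means, the sketch below estimates how many pieces each version needs in order to detect a given lift. It uses the standard normal-approximation formula for comparing two proportions. The 80% power setting and the example rates are assumptions chosen for illustration, not figures from this article.

```python
import math
from statistics import NormalDist


def pieces_per_group(base_rate, target_rate, confidence=0.95, power=0.80):
    """Approximate mail quantity per version needed to detect the given lift."""
    z_alpha = NormalDist().inv_cdf(0.5 + confidence / 2)
    z_power = NormalDist().inv_cdf(power)
    mean_rate = (base_rate + target_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * mean_rate * (1 - mean_rate))
        + z_power * math.sqrt(
            base_rate * (1 - base_rate) + target_rate * (1 - target_rate)
        )
    ) ** 2
    return math.ceil(numerator / (target_rate - base_rate) ** 2)


# Hypothetical goal: detect a lift from a 2.0% to a 2.5% response rate.
needed = pieces_per_group(0.02, 0.025)
print(f"Pieces needed per version: {needed:,}")
```

Under these assumptions the answer comes out in the low tens of thousands per version. Smaller lifts require considerably more mail, which is why tests on small lists often end without a clear winner.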

